I started out my career in moving images back in 1986, as a cataloguer. I worked at the National Film Archive (as it then was), describing the films and television programmes that we acquired by country, title, date, creators and performers. The films were indexed according to subject – we used the Universal Decimal Classification system, or UDC – and on occasion were shotlisted in detail. We were a professional and dedicated team in the cataloguing department, proud of our work, and able to serve general, specialist and commercial enquirers according to need. We weren’t able to keep up entirely with the level of acquisition or, more particularly, the inherited backlog, but backlogs were a part of what made an archive in any case.

Those days are gone. Considering each film in turn and in detail, generating thousands of subject index cards, indulging in the minutiae of shotlisting, producing a catalogue that people had to come and see at our London office or else they would have little idea about what we held at all: all that would not be sustainable now. It was another age.

We weren’t blind to the rise of computer technologies. On the contrary, we had our first computer entry system in place by the mid-80s, using it first to generate a non-fiction print catalogue before adapting it into a database which was maintained in parallel with the paper-based system. We could see the obvious advantages of electronic systems for bringing together common fields to the ideal discovery mechanism. We saw ourselves as always managing such systems. But time moves on.

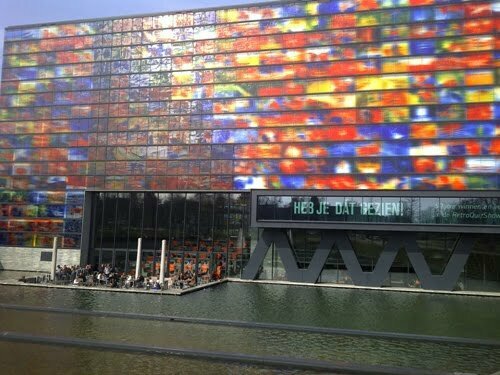

It is 2013, and now I find myself at the FIAT/IFTA seminar Metadata as the Cornerstone of Digital Archiving, held at the Netherlands Institute for Sound and Vision in Hilversum, 16-17 May. Essentially its theme was how we are building the automated, self-generating broadcast archive, and where our role as humans lies in this as the machines themselves become ever smarter, learning from ourselves and from the very content that they manage.

Metadata – such an ugly word – is generally defined to mean ‘data about data’. It is structured information. When you produce a Word document and save it, the properties state what type of document it is, when it was produced, when it was subsequently edited, who wrote it, how many words it uses, and so on. That is metadata. When you take a photograph with your phone or digital camera, you capture not only the image but an array of information about that image – when it was taken, with what make of camera, where it was taken, with what settings. That is metadata.

Digital objects requires this intelligence so that they can be stored and read again. Such intelligence also enables us to collate, sort and build up resources based on digital objects with common information. That is what a database does, and our world – from Google upwards – is driven by databases. So metadata matters.

Half of the FIAT seminar was devoted to core metadata such as this. It is hugely important for broadcast archives, now that production workflows and preservation needs are predominantly digital, to get their data in order. The issues are complex, and look to be breeding a new kind of moving image archivist best able to deal with them, but the essential details are well-understood, with guidelines such as PREMIS developed by the Library of Congress literally setting the standards.

But it was the other half that most interested me – the metadata that describes the content rather than the carrier, and the degree to which such metadata is now being generated automatically. Digital objects come embedded with huge amounts of information about themselves, particularly in the audiovisual field, and we are only just starting to to learn how to extract such information and make it reusable.

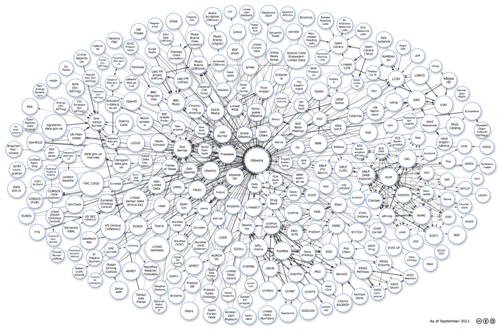

For example, a lot was said about subtitles. Many television programmes are broadcast with a subtitle stream included as part of the digital signal, designed for the hard of hearing. Such subtitles are graphics rather than text (i.e. they are bitmap images), but through a process of optical character recognition (OCR) they can be converted into word-searchable text. That gives you a handy account of everything that was said in a programme, but you can also match those words to dictionaries and thesauri, such as DBpedia, the Web community’s source of structured information derived from Wikipedia. These keywords can then be employed to enable searches to be made across different datasets, linking your TV programmes to other programmes, or other information sources. This is what they called Linked Data, the mechanism that lies at the heart of Tim Berners-Lee’s vision for the next stage of development for the World Wide Web, the Semantic Web.

Linked Data works on a principle of particular Web standards: http, uniform resource indicators or URIs, and the RDF metadata model that joins up information in sets of triples as subject-predicate-object e.g. “Charles Dickens [subject] is the author of [predicate] Great Expectations [object]”. The details need not concern us here. What is interesting is to see how the principles can be applied not just to an obvious data stream such as subtitles, but to other sets of information contained within audiovisual media.

I’ve already written here about the great potential of speech-to-text technologies for opening up speech archives. The various means by which a digital speech track can be converted into readable text have come very much to the fore of late, and there was a real sense at the seminar of this being the next leap forward for broadcast archives. What can be done with subtitles can potentially be done with any speech file – including radio, of course. There were three impressive demonstrations of speech-to-text in action. Yves Raimond of BBC R&D showed how the World Service digital archive is gained enriched descriptions through a mixture of rough speech-to-text generating keywords (rather than transcripts – such technologies seldom produce perfect transcripts) which were then enhanced through ‘crowdsourcing’ i.e. getting volunteers to improve them. Sarah-Haye Aziz of Radiotelevisione Svizzera (Italian-speaking Swiss TV) showed how they had adopted speech-to-text to improve in-house indexing of their programmes. And Xavier Jacques-Jourion of Belgian broadcaster RTBF demonstrated the hugely impressive GEMS, a prototype for a semantic-based multimedia browser. What that means is that the system links data extracted from both traditional sources and speech-to-text, then combines it with Linked Open Data via a smart graphical user interface, or web page to you and me. So a programme which talks about parks (which might be covered by a regular catalogue record) in which trees are discussed (which might not be in a regular catalogue record) is linked automatically to other web resources about trees, contextualising the tree that appears in the programme you are searching. Likewise for events, locations, persons and so on. It’s called entity extraction, and it is going to loom large in our lives, and soon.

But that’s just the start of it. Cees Snoek of the Intelligent Systems Lab at the University of Amsterdam talked us through the practicalities and possibilities of image searching. This is the holy grail for many in the Web world – and many in the surveillance and security industries as well. It’s easy for a computer to find an image if you tell it what that image signifies through adding a description (that’s how Google Images works), but it’s a lot harder for that computer to recognise what a image means without any textual help. It just sees patterns. So the task is to train computer to discriminate between such patterns, training it to understand the co-ordinates of a particular shape and what that signifies, then being able to recognise similar objects elsewhere. We were shown how a computer had been trained to recognise boats by working from a set of images that showed boats, and others that didn’t. It found a lot of boats, but also thought an oil platform was a boat, and likewise a car driving on a wet road.

Systems like this can be found online, but it’s where Snoek took us next that start to make the mind boggle. Why might not a machine analyse a video to make a sentence, or description, from what it sees? By training computers with ‘concept vocabularies’ (ideas rather than simple words) the machine would be able to link together intelligently the images that it sees contained within that video. The video would essentially describe itself.

Who will need cataloguers now?

And there was more. Sam Davies of BBC R&D introduced us to their work on mood-based classification of broadcast archives. By getting actual human beings (they still have their uses) to watch a set of programmes and judge which parts of them were happy, sad, serious, angry etc, and matching such classification to the digital patterning of those programmes, you start to have a system which can be applied to a vast corpus of broadcast content and thereby tell you which programmes are comedies, news, thrillers, or more particularly which programmes excite which kinds of emotions in people. Davies told us that the next step was to combine this means of determining the emotion-led content of programmes with other meaningful data – the affective and the semantic. The machine will know not just what was meant, but what we will feel about what was meant.

So what role is there for humans in the world of machine-generated intelligence? There was much muttering among the archivists in the audience who wanted to see skill and judgment valued above the random nature of automatic extraction and linking. There were some interesting figures bandied about. Sarah-Haye Aziz reckoned that in the future 90% of routine cataloguing would be done by machines, 10% high quality work by humans. Lora Aroyo of Free University Amsterdam, talking about the ingenious Dutch crowdsourcing tool Waisda?, which gets members of the public to tag archive TV programmes as a game, found that when it came to getting the public to choose subject terms, 8% occured in the professional vocabularies of archivists and cataloguers, 23% were to be found in the Dutch lexicon, and 89% were found on Google. People and archivists don’t speak the same language.

They don’t have to speak the same language, of course. Cataloguing is about discriminating between different types of information, and applying skill to how you describe what is before you. It is more trustworthy information. But traditional catalogues belong to a different era, one where the cultural institution knew best. That’s no longer case, or at least not necessarily so. Cultural organisations want to have a much closer, sharing relationship with their public. Projects such as the public tagging of art for the Rijksmmuseum and the UK’s Your Paintings (both covered here previously) point the way. Of course both have proper cataloguing descriptions underpinning those works of art, and have simply opened up to the public new ways of classifying their collections by subject or mood. No one is asking the public to tell them who the painter might be, or who the programme maker was. That’s the 10% that we must leave to the human specialist, for the time being. But the remaining 90% will be decided by the machine. And it is getting more and more intelligent at doing so.

Links:

- Details of the speakers and themes of the FIAT/IFTA metadata seminar are here

- Slideshow presentations from the seminar can be found at http://www.slideshare.net/beheerderbeeldengeluid

- Find out more about Linked Data and the Linked Open Data (LOD) map at http://linkeddata.org and see what the UK government is doing about releasing its data in this form at http://data.gov.uk/linked-data

- The BBC R&D (Research & Development) site is a good place for finding out where broadcast technologies are taking us in terms of production, description and delivery

- Welcome to the Machine, a 2008 post of mine on The Bioscope blog, speculates on the future of audiovisual archiving in a digital world, inspired by another conference held at the Netherlands Institute for Sound & Vision.

In the knowledge base on audiovisual archiving we keep track of the interesting work on image searching and automatic concept detection by Cees Snoek : http://www.avarchivering.nl/node/615 .

The Netherlands Institute for Sound & Vision (Beeld en Geluid) has posted reports on the two days, by Ian Matzen:

Day 1: http://www.beeldengeluid.nl/node/7833

Day 2: http://www.beeldengeluid.nl/node/7834