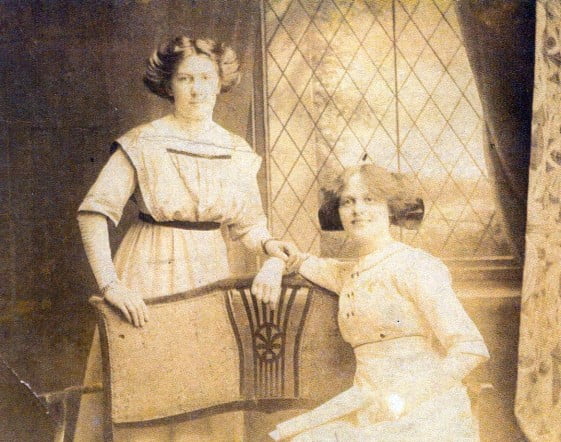

Here is a family photograph. I know who the one on the left is, but not the one on the right, and I long to know more. If only she could tell me something about herself. If only she could talk.

Well, one day maybe not so far away, she will.

I’ve been writing about colourisation in its various forms, most recently about the Colourise app, which has enabled many people to use basic colourisation to convert black-and-white photographs into colour, of a sort. I mocked the idea by trying out the software on stills from modern feature films shot in monochrome, but it is all too tempting to upload that old photograph from black-and-white days, and see family or friends past given some extra semblance of convincing life, a twitch to the corpse.

But colour won’t be enough – we will want movement, and speech. On the former, things are advancing at a significant rate. Deepfake software has caused much alarm over its potential to fool people into believing a public figure is saying something that they have never said, as demonstrated by the viral video of Jordan Peele impersonating the voice of Barack Obama over a video of Obama apparently mouthing those very words. Such fears have yet to be translated into the actual world of news, though last year there was worry about a fake video of Donald Trump mocking climate change, produced by a Belgian political party. What was interesting about this was not that it was a convincing recreation of Trump, designed to fool, but that it was not. The Trump figure was an obvious piece of fakery, and the video’s commentary admitted as much (“we all know that climate change is fake, just like this video”, ‘Trump’ tells us). Yet some people still fell for it, complaining bitterly about the American president’s prejudice. The story shows that visual authenticity is a complex business. We can be fooled by the palpable fake as much as the plausible fake, if we are so minded.

Dali lives (and how it was done)

More convincing, and more indicative of what comes next, is a video released the other day in which Salvador Dali asks you to take a selfie with him. The video was created by superimposing a synthesis of Dali’s facial expressions over an actor of the same build as the artist, with texts taken from interviews and letters voiced by another actor (in English, French and Spanish). It was made for the Dalí Museum in St. Petersburg, Florida, which is hosting an exhibition entitled Dali Lives. Much more of this sort of thing is certain to follow as the cost of producing it falls. Imagine visiting a stately home or historical site in the near future and, instead of being greeted by an actor in costume, having Henry VIII tell you his side of the story, then each wife tell you hers. Add extra dollops of AI, and you’ll be holding conversations with them.

Such videos can be created because there is a substantial bank of images of the subject, from which the software can derive convincing verisimilitude in motion. Famous people from modern times have been photographed countless times, but nowadays we are all photographed countless times and could be similarly reanimated, except that we’re still here and we video ourselves constantly as well. What would be the point?

But what of those for whom only a few images exist, or even a single image? And what if that image were not even a photograph, but a painting? Can they be brought back to life?

How Realistic Neural Talking Head Models are made

This week there have been reports of an algorithm developed in Russia that does pretty much this. Created by the Samsung AI Centre in Moscow and the Skolkovo Institute of Science and Technology, the technology takes a few images of a subject and fuses them into a credible speaking version. It can do so because the system has first been trained on the VoxCeleb dataset of videos of people speaking, amassed by the Visual Geometry Group at Oxford University.

The Realistic Neural Talking Head Models (as they call them) have mostly been created using eight or so images, but it is possible to work with fewer. The most startling outcome of the project is what can be done with a single image, as demonstrated towards the end of the project’s YouTube video. The researchers have made fleeting talking models from single famous photographs of Marilyn Monroe, Albert Einstein, Fyodor Dostoyevsky and Salvador Dali. Then they go further. In the video’s most astonishing coup, we see Kramskoi’s ‘Portrait of an Unknown Woman’ and then the ‘Mona Lisa’ each turned into three talking versions of themselves.

They are not actually talking (though they must at least have been given words to mouth), but you can see how this could be developed. For a famous dead person, you could imagine them speaking words they once wrote. Anne Frank could read us her diary; Jane Austen could trigger an explosion in a new kind of audio-visual e-book; Shakespeare would welcome us to Stratford in David Mitchell’s voice.

But even the obscure could be given words to say. There might be diaries or letters that they wrote, and if nothing survives then they could be given words appropriate to their time, locality, class and preoccupations. Their avatars could be fed some basic facts about their lives, and then the algorithms would do the rest, creating the semblance of a life much as colourisation is now doing. It would be as fundamentally meaningless as colourisation is, but how we would love to be deluded. If it is not them, then it could be them. It is more than a black-and-white photograph, trapped in its moment of time, which tells me so little. It has a life.

Scary as the implications are, such things will come to pass; of that we can have no doubt. Aside from the obvious ethical and legal conundrums (what happens when someone makes a video of you saying something you never said?), all sorts of issues are raised, for me, about the archival status of images, whether still or moving. The idea that an archival image should be understood as the output of a particular time and technology, best understood in its primary state, could be changing. While one still needs an ur-image from which to create a digital derivative, it is what is derived that may end up supplying that which is meaningful to us.

The archival digital image may come to mean that which can be inferred from it. I don’t think anyone has coined the phrase before now, so I shall do so – it’s the inferred image. Through deep learning techniques, the image will relay all that previously we imagined it might do when we stared at it and wished that it could tell us more. This could profoundly alter our sense of what we collect, what it signifies, and how it should be understood.

Of course, the inherent manipulability of the digital image, with all that that means for the understanding of truth and significance, has been long understood, from William J. Mitchell’s The Reconfigured Eye: Visual Truth in the Post-photographic Era (1992) onwards. The digital image has profoundly changed our sense of what an image means, simply because it is not an end point but a starting point, a row of numbers understood through code, inviting us to tease out its implications.

The question that arises is: who owns these inferred meanings? Our audiovisual archives, by which I mean still and moving image (and sound), are filled with objects whose digital lives may be about to alter dramatically. We’re already seeing several television programmes riding on the back of the colourisation coup of Peter Jackson’s They Shall Not Grow Old, which made soldiers of the First World War seem to live anew in colour and speech. The business is bound to expand. Producers have long manipulated images once they have got their hands on the object from the archive. The change comes when it is the archive that does the manipulation, or is at least complicit in such activity. Such archives could be on the verge of a new stage in their existence, caring no longer simply for passive objects but for active ones. What they hold has the potential to mushroom into multiple lives, feeding our demand for an ever more tangible connection with the past. Unlocking the potential in the digitised image could even become a standard obligation. The audiovisual archive of the future may be an unbounded virtual world.

The woman on the right of the photograph at the head of this post could be my great-grandmother, Susanna. We just don’t know. There are no other pictures, and no clues within the photograph, except that it would be her sister on the left. If she could talk, what would I have her say? If she said, “Yes, that is who I am”, would I believe her? After a time, perhaps I would.

Links:

- A paper on the Russian project, by Egor Zakharov, Aliaksandra Shysheya, Egor Burkov and Victor Lempitsky, ‘Few-Shot Adversarial Learning of Realistic Neural Talking Head Models’, is published on arXiv

- The Register explains how it all works, in relatively simple terms, and worries about the implications, while Ars Technica, in ‘Deepfakes are getting better—but they’re still easy to spot’, gives background history on deepfakes and says none of this will be coming to the general user any time soon

- ‘Deepfake Salvador Dalí takes selfies with museum visitors’ – The Verge explains the background to the Dali Lives exhibition (which opened on 11 May 2019)

- ‘A Belgian Political Party Is Circulating A Trump Deepfake Video’ – BuzzFeed on the Belgian Trump fake video

Update (February 2021):

Sure enough, that which was experimental has become commercial. Family history business MyHeritage has come up with the Deep Nostalgia app, which converts still photographs into animated portraits (https://www.myheritage.com/deep-nostalgia). It works best with people looking directly at the camera. It’s noticeable that the app tries to make your ancestor smile. So here is Susanna, maybe.

I understand the people who animate images by Muybridge or Marey, since those were meant to capture motion. I also understand the project which animated Lilienthal’s photos, since there were so many of them. So far I have not seen a convincing case for making other still pictures move. One person who posts in silent-movie groups on Facebook likes to take photos of Theda Bara and others and make their eyes blink. I don’t see the point. I wonder if anyone was inspired by the moving photos in the Harry Potter stories.

I think the only case is that people will want to see it happen. Its surface appeal will be the fun of seeing that which is static move, then putting voices to those faces for whatever purpose (amusement, malice, political intent, advertisement, comfort). The deeper urge is, I think, that feeling we get when we see an old photograph and want to recover the life that it fixed for a split second. Nostalgia, I guess. That’s why I included the family photograph.

Or maybe it is just the Harry Potter effect.

I did rather like the Salvador Dali thing because it was ‘classy’. As someone in the video said, I too felt goosebumps.

Dali was presumably ‘a character’ and you couldn’t be quite sure what he would do or say. He couldn’t be allowed to exist today, so even to see him in re-animated form is a bit of a thrill.

Many people feel that the past was in some ways better than the present (think of the opening sequences of Life on Mars for a quite sublime expression of that).

The thought that perhaps some of the essence of the past could be preserved, or better still grown in a culture in a computer, and released again is tantalising; that’s why I’m quite enthusiastic about enhancing old footage. The medium of ‘a person’ whose personality is captured by AI is another way to do this. I think this is a more fascinating idea than craftsmen and performers simply creating high-tech automata.